100% focus on the essential task…

…thanks to the MXcarkit. The success story from a model car at a student competition to a top tool in research and development has already been told. In this blog post, we will use an application example to show what can be extracted from the perfectly equipped model cars, both among students in research institutes and in the R&D department of an OEM.

The MXcarkits are modified model-construction vehicles that do much more than drive and park under remote control. With sensor technology and a real-time computer built in, they carry real computing power on board and can therefore handle automated driving situations.

Tasks: Parking space recognition and environment mapping

Known from the VDI Cup on Autonomous Driving (VDI ADC), the MXcarkit also serves as a platform for developing further essential functions, in this case parking space detection and environment mapping.

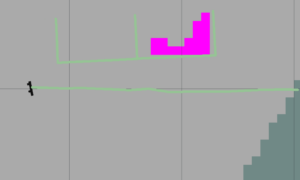

In other words, these are the first two steps towards a fully automated parking function. The research group at the Adrive Living Lab of Kempten University of Applied Sciences, which was specifically brought in for this topic, introduced a two-step procedure. In the first step, the vehicle automatically recognizes parking space markings and thus determines the position of the parking spaces. In the second step, the generation of a map, it checks whether these parking spaces are occupied or free.
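To make the second step a little more concrete, here is a minimal sketch of how an occupancy check on a grid map could look. It is not the research group's implementation. It assumes a grid in the style of the ROS `nav_msgs/OccupancyGrid` message (cell values 0 to 100 for occupancy, -1 for unknown) and, for simplicity, a parking space given as an axis-aligned rectangle in map coordinates. The function name, the threshold and the three return states are hypothetical.

```python
import math

def parking_space_state(grid, resolution, origin,
                        x_min, y_min, x_max, y_max,
                        occupied_threshold=50):
    """Classify a rectangular parking space as 'free', 'occupied' or 'unknown'.

    grid       -- list of rows, grid[row][col] in 0..100, or -1 for unknown
    resolution -- edge length of one cell in metres
    origin     -- (x, y) map position of the corner of cell grid[0][0]
    The threshold of 50 is an assumption for illustration only.
    """
    ox, oy = origin
    # Convert the rectangle to cell index ranges and clamp them to the grid.
    col_lo = max(0, math.floor((x_min - ox) / resolution))
    col_hi = min(len(grid[0]), math.ceil((x_max - ox) / resolution))
    row_lo = max(0, math.floor((y_min - oy) / resolution))
    row_hi = min(len(grid), math.ceil((y_max - oy) / resolution))

    cells = [grid[r][c]
             for r in range(row_lo, row_hi)
             for c in range(col_lo, col_hi)]
    if not cells:
        return "unknown"   # rectangle lies completely outside the map
    if any(value >= occupied_threshold for value in cells):
        return "occupied"  # at least one cell is likely blocked
    if any(value < 0 for value in cells):
        return "unknown"   # part of the space has not been observed yet
    return "free"

# Small 4 x 6 example map with 0.5 m cells and one obstacle.
example_map = [[0] * 6 for _ in range(4)]
example_map[2][2] = 100
print(parking_space_state(example_map, 0.5, (0.0, 0.0), 0.5, 0.5, 1.5, 1.5))
print(parking_space_state(example_map, 0.5, (0.0, 0.0), 2.0, 0.0, 3.0, 1.0))
```

Treating a single blocked cell as "occupied" is a deliberately cautious choice for this sketch; a real system would more likely look at the share of blocked cells and handle rotated parking spaces.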

The MXcarkit comes with the necessary sensor technology

Everything needed for these tasks is already on board: a front stereo camera and ten ultrasonic sensors serve as the vehicle’s environment sensors. The NVIDIA Jetson, which is also installed in the Carkit, acts as a powerful computer designed for robotics and AI applications, while ROS (Robot Operating System) is used to implement the software quickly and efficiently.

The MXcarkit is therefore fully equipped: parking space detection can be implemented with the sensor technology available on the vehicle. The detection finds parking space markings in the front camera images and converts their image coordinates into vehicle coordinates. For the vehicle to park, it also needs an environment map, which is used to avoid dynamic and static objects and to detect whether parking spaces are occupied. For this purpose, widely used ROS packages for sensor data fusion and Simultaneous Localization and Mapping (SLAM) are combined with a self-developed parking space detection system.
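How a marking pixel can end up in vehicle coordinates is easiest to see in a small example. The following is a minimal sketch under stated assumptions, not the detection system described here: it assumes a pinhole camera with known intrinsics (`fx`, `fy`, `cx`, `cy`), a flat ground plane, a camera mounted at a known height and downward pitch, and a vehicle frame with x forward, y left and z up. The function name and all parameter values are hypothetical.

```python
import math

def pixel_to_vehicle(u, v, fx, fy, cx, cy,
                     cam_height, cam_forward=0.0, pitch_down=0.0):
    """Project an image pixel onto the ground plane in vehicle coordinates.

    Returns (x, y) in metres, x forward and y left of the vehicle origin,
    or None if the pixel's viewing ray never reaches the ground.
    """
    # Normalized viewing ray in the camera frame (x right, y down, z forward).
    xc = (u - cx) / fx
    yc = (v - cy) / fy

    # Same ray expressed with vehicle axes (x forward, y left, z up),
    # still assuming a camera that looks straight ahead.
    x, y, z = 1.0, -xc, -yc

    # Tilt the ray by the camera's downward pitch (rotation about the y axis).
    c, s = math.cos(pitch_down), math.sin(pitch_down)
    dx = c * x + s * z
    dy = y
    dz = -s * x + c * z

    if dz >= 0.0:
        return None  # ray points at or above the horizon

    # Stretch the ray until it drops by the camera height, i.e. hits z = 0.
    scale = cam_height / -dz
    return (cam_forward + scale * dx, scale * dy)

# Hypothetical numbers: 640 x 480 image, camera 0.2 m above the ground
# and 0.1 m ahead of the vehicle origin, no pitch.
print(pixel_to_vehicle(570, 290, fx=500, fy=500, cx=320, cy=240,
                       cam_height=0.2, cam_forward=0.1))
```

The flat-ground assumption keeps the example short. With a stereo camera, depth information could be used instead, and a calibrated system would also account for lens distortion and the full camera mounting pose.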

The advantage of working with the MXcarkit is clear: the hardware is completely built in, the interfaces are in place and the code for addressing the sensors is already written. The engineer can focus 100% on the essential task, which in this application example means detecting the parking space and calculating the position of the vehicle relative to it.

There is more to come!

Right now, a research group is working on the final step of fully automated parking using trajectory planning, and on improving vehicle-odometry estimation using sensor data fusion. We will report on the results. Stay tuned!

For detailed information, feel free to contact Prof. Dr. Markus Krug (markus.krug@mdynamix.de) or Johann Haselberger (johann.haselberger@hs-kempten.de).